What is the idea behind deep learning algorithms?

Deep learning algorithms learn useful representations of data on their own, a process loosely inspired by the way the brain processes information. They are widely used to extract features from raw input data, group similar objects, and discover useful patterns in data.

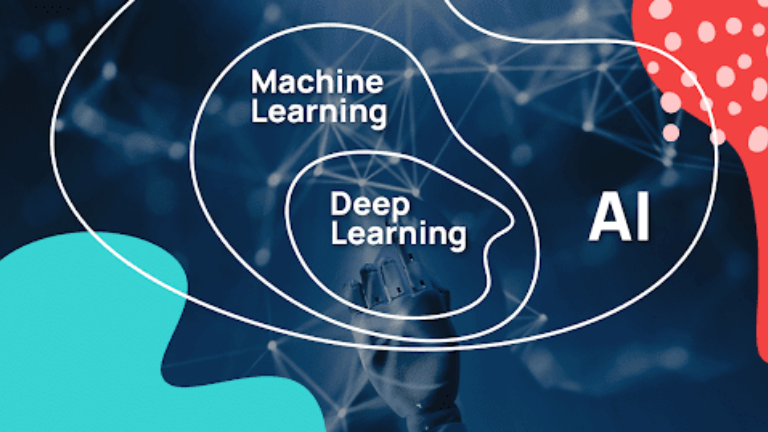

Deep learning is a subset of machine learning that focuses on using neural networks with many layers (hence “deep”) to model and understand complex patterns in data. The key idea behind deep learning algorithms is to automatically learn representations of data at multiple levels of abstraction, enabling the model to capture intricate structures and relationships within the data. Here’s a more detailed look at the foundational concepts and principles.

1. Neural Networks

At the core of deep learning are artificial neural networks (ANNs), which draw inspiration from the structure and function of the human brain. An ANN consists of interconnected layers of nodes (neurons), each performing a simple computation; stacked together, these layers give the network its ability to learn and process complex information.

Input Layer: Receives the raw data.

Hidden Layers: Intermediate layers that transform the outputs of the previous layer using weighted connections. Deep learning networks have many hidden layers.

Output Layer: Produces the final prediction or classification.
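To make the three layer types concrete, here is a minimal forward pass in plain Python. It is a sketch under stated assumptions: the layer sizes, the random initialization, and the use of ReLU in the hidden layers are illustrative choices, and helper names such as `dense` and `forward` are hypothetical rather than taken from any library.

```python
import random

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b), computed row by row."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (an illustrative initialization)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
# Hypothetical sizes: 3 inputs -> two hidden layers of 4 units -> 2 outputs
sizes = [3, 4, 4, 2]
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

def forward(x):
    """Input layer -> hidden layers (ReLU) -> linear output layer."""
    for i, (weights, biases) in enumerate(layers):
        activation = identity if i == len(layers) - 1 else relu
        x = dense(x, weights, biases, activation)
    return x

output = forward([1.0, 0.5, -0.2])
print(output)  # two raw output scores
```

Each hidden layer only sees the previous layer's outputs, which is what lets deeper layers build on features computed by earlier ones.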

2. Layers and Abstraction

Deep learning models stack multiple layers, each learning a different level of abstraction:

Lower Layers: Capture simple features like edges in an image.

Intermediate Layers: Detect more complex features like shapes or textures.

Higher Layers: Recognize high-level patterns like objects or faces.

3. Backpropagation and Gradient Descent

Training a deep neural network involves adjusting the weights of connections based on the error of the network’s predictions. This process consists of the following steps:

Forward Pass: Input data is passed through the network, producing an output.

Loss Function: Measures the difference between the predicted output and the actual target.

Backward Pass (Backpropagation): The error is propagated backward through the network, and weights are updated using gradient descent to minimize the loss function.
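The three steps can be sketched on the smallest possible model: a single linear neuron `w * x + b` trained with a mean-squared-error loss on hypothetical data generated from `y = 2x + 1`. Because the model has only one layer, backpropagation reduces to a single application of the chain rule; deeper networks repeat the same computation layer by layer, from the output back to the input.

```python
# Hypothetical training data sampled from y = 2x + 1
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
n = len(data)

def mse(w, b):
    """Loss function: mean squared error between predictions and targets."""
    return sum((w * x + b - y) ** 2 for x, y in data) / n

w, b = 0.0, 0.0
learning_rate = 0.05
initial_loss = mse(w, b)

for _ in range(500):
    grad_w = grad_b = 0.0
    for x, y in data:
        pred = w * x + b                # forward pass
        d_pred = 2.0 * (pred - y) / n   # dLoss/dpred for the MSE loss
        grad_w += d_pred * x            # chain rule: dpred/dw = x
        grad_b += d_pred                # chain rule: dpred/db = 1
    # gradient descent: step against the gradient to reduce the loss
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mse(w, b)
print(round(w, 3), round(b, 3))
```

After 500 updates, `w` and `b` should end up very close to 2 and 1, the parameters that generated the data.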

4. Activation Functions

Activation functions introduce non-linearity into neural networks, enabling them to learn and model complex patterns in data. Common activation functions include the following:

Sigmoid: Squashes the output to a range between 0 and 1.

Tanh: Maps the input to a range between -1 and 1.

ReLU (Rectified Linear Unit): Introduces non-linearity by outputting zero for negative inputs and the input itself for positive inputs.
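Each of the three activation functions fits in a few lines. This is an illustrative sketch; the sigmoid is written in a numerically stable form so that large negative inputs do not overflow `math.exp`.

```python
import math

def sigmoid(z):
    """Squashes z into (0, 1); split into two cases to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Maps z into (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Zero for negative inputs, identity for positive inputs."""
    return max(0.0, z)

for z in [-2.0, 0.0, 2.0]:
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")
```

Without such a nonlinearity, a stack of layers would collapse into a single linear transformation, no matter how many layers it had.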

5. Regularization Techniques

To prevent overfitting (where the model performs well on training data but poorly on new data), various regularization techniques are used:

Dropout: Randomly “drops” units (neurons) during training, forcing the network to learn redundant representations.

L2 Regularization: Adds a penalty for large weights to the loss function to encourage smaller weights.
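Both techniques fit in a few lines. The sketch assumes the common "inverted dropout" formulation, which rescales the surviving units during training so nothing needs to change at inference time, and an L2 penalty of the form `lam * sum(w**2)` (some texts use `lam / 2` instead). The activations, weights, and loss value are hypothetical.

```python
import random

def dropout(activations, p, rng, training=True):
    """Inverted dropout: during training, zero each unit with probability p and scale
    the survivors by 1 / (1 - p) so the expected activation is unchanged.
    At inference time (training=False) the activations pass through untouched."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def l2_penalty(weights, lam):
    """L2 regularization term: lam * sum of squared weights, added to the data loss."""
    return lam * sum(w * w for w in weights)

rng = random.Random(42)
hidden = [0.8, 1.2, 0.3, 2.0, 0.5]
print(dropout(hidden, p=0.5, rng=rng))                   # some units zeroed, the rest doubled
print(dropout(hidden, p=0.5, rng=rng, training=False))   # unchanged at inference

data_loss = 0.37               # hypothetical loss on a batch
weights = [0.5, -1.0, 2.0]     # hypothetical weights
total_loss = data_loss + l2_penalty(weights, lam=0.01)
```

Because the L2 term grows with the square of each weight, gradient descent on `total_loss` pulls large weights toward zero more strongly than small ones.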

6. Optimization Algorithms

Beyond basic gradient descent, several optimization algorithms improve training efficiency and convergence:

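Stochastic Gradient Descent (SGD): Updates the weights using the gradient computed on a single example or a small batch (mini-batch) of data, making each update much cheaper than a pass over the full dataset.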

Adam: Combines the advantages of two other extensions of SGD, AdaGrad and RMSProp, maintaining an adaptive learning rate for each parameter.
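The difference between the two optimizers can be sketched in plain Python. The sketch assumes a one-parameter linear model and hypothetical noise-free data from `y = 3x`; `sgd_step` and the `Adam` class are illustrative names, not a library API. The Adam update follows the standard form with bias-corrected first- and second-moment estimates and the commonly cited defaults (`beta1=0.9`, `beta2=0.999`, `eps=1e-8`).

```python
import math
import random

def sgd_step(params, grads, lr):
    """Plain (mini-batch) SGD: move every parameter against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]

class Adam:
    """Adam update rule with its commonly used default hyperparameters."""

    def __init__(self, n_params, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # moving average of gradients (momentum-like term)
        self.v = [0.0] * n_params  # moving average of squared gradients (RMSProp-like term)
        self.t = 0

    def step(self, params, grads):
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction for zero init
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            # effective per-parameter step size is lr / (sqrt(v_hat) + eps)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params

# Hypothetical noise-free data from y = 3x, fitted with the one-parameter model y_hat = w * x
data = [(k / 10.0, 3.0 * k / 10.0) for k in range(-20, 21)]

def batch_grad(w, batch):
    """Gradient of the mean squared error over one mini-batch."""
    return [sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)]

rng = random.Random(0)
w_sgd, w_adam = [0.0], [0.0]
adam = Adam(n_params=1, lr=0.05)
for _ in range(500):
    batch = rng.sample(data, 4)  # a small random mini-batch
    w_sgd = sgd_step(w_sgd, batch_grad(w_sgd[0], batch), lr=0.1)
    w_adam = adam.step(w_adam, batch_grad(w_adam[0], batch))
print(w_sgd, w_adam)
```

Both estimates should approach 3. Adam's first step has a magnitude of roughly `lr` whatever the scale of the gradient, because the update divides the averaged gradient by its own root-mean-square.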

7. Convolutional Neural Networks (CNNs)

Used primarily for image data, CNNs leverage convolutional layers to automatically and adaptively learn spatial hierarchies of features. They have been highly effective in tasks such as image classification, object detection, and image segmentation.

Convolutional Layers: Apply convolutional filters to capture local patterns.

Pooling Layers: Reduce the spatial dimensions of feature maps, retaining essential information while lowering the computational load.

Fully Connected Layers: Integrate the high-level features for final classification.
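The convolution and pooling steps can be sketched directly on a small grid of numbers. Two assumptions apply: like most deep learning libraries, the sketch implements "convolution" as cross-correlation (the kernel is not flipped), and the edge-detecting kernel is hand-picked for illustration, whereas a CNN learns its kernel weights during training. The function names and the image are hypothetical.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over the image without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Hypothetical 5x5 image with a vertical edge between columns 1 and 2
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge filter; a CNN would learn weights like these
kernel = [[-1, 1], [-1, 1]]
fmap = conv2d(image, kernel)   # 4x4 feature map
pooled = max_pool2d(fmap)      # 2x2 after pooling
print(fmap)
print(pooled)
```

The 4x4 feature map responds only in the column where the edge sits, and 2x2 max pooling shrinks it to 2x2 while keeping that response, which is the "retain essential information, reduce computation" trade-off described above.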

8. Recurrent Neural Networks (RNNs)

Recurrent neural networks (RNNs) are specialized for handling sequential data, such as time series or natural language, by maintaining a hidden state that captures information from previous inputs.

Variants include:

LSTM (Long Short-Term Memory): Uses gating mechanisms to mitigate the vanishing gradient problem of standard RNNs, allowing it to capture long-term dependencies more effectively.

GRU (Gated Recurrent Unit): A simpler gated alternative to LSTM, with fewer parameters and often comparable performance.
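The hidden-state idea can be sketched as a vanilla (Elman) RNN step, `h_t = tanh(W_x x_t + W_h h_{t-1} + b)`. The weights and input sequence below are hypothetical; LSTMs and GRUs replace this single update with gated updates but keep the same loop over time steps.

```python
import math

def matvec(m, v):
    """Matrix-vector product with the matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """Vanilla RNN update: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    pre = [u + w + c for u, w, c in zip(matvec(W_x, x_t), matvec(W_h, h_prev), b)]
    return [math.tanh(z) for z in pre]

def run_rnn(sequence, W_x, W_h, b, hidden_size):
    h = [0.0] * hidden_size  # initial hidden state
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, W_x, W_h, b)  # the same weights are reused at every time step
        states.append(h)
    return states

# Hypothetical weights: 1-dimensional inputs, 2 hidden units
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]
states = run_rnn([[1.0], [0.0], [-1.0]], W_x, W_h, b, hidden_size=2)
for h in states:
    print([round(v, 3) for v in h])
```

Even at the second step, where the input is zero, the hidden state is non-zero because it carries information forward from the first input.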

9. Transformers

Transformers have revolutionized natural language processing (NLP) with their ability to handle sequential data more efficiently than RNNs. They rely on self-attention mechanisms to weigh the importance of different parts of the input sequence.

10. Unsupervised and Semi-Supervised Learning

While supervised deep learning relies on labeled data, unsupervised and semi-supervised approaches learn wholly or partly from unlabeled data.

Autoencoders: Learn efficient representations by reconstructing the input data.

Generative Adversarial Networks (GANs): Consist of two networks, a generator and a discriminator, that compete against each other: the generator produces synthetic samples, and the discriminator tries to tell them apart from real data. This competition drives the generator toward producing realistic data, and GANs have demonstrated remarkable capabilities in tasks such as image generation, style transfer, and data augmentation.
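A full GAN training loop needs two trainable networks, but the objectives they optimize can be sketched on their own. The functions below take the discriminator's output probabilities, `D(x)` on real samples and `D(G(z))` on generated ones, and compute the binary cross-entropy losses commonly used for each player; the generator loss shown is the widely used "non-saturating" variant. The probability values are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: push D(x) toward 1 on real data
    and D(G(z)) toward 0 on generated data."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D(G(z)) is close to 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that separates real from fake has a low loss...
confident_d = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
# ...while one that cannot tell them apart (outputs 0.5 everywhere) has a higher loss.
fooled_d = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(round(confident_d, 3), round(fooled_d, 3))
```

The two losses pull in opposite directions on the same quantity `D(G(z))`, which is the adversarial competition described above.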

11. Attention Mechanisms

Self-Attention: Self-attention allows a model to weigh the importance of different parts of the input sequence for each token in the sequence. Consequently, this enables more efficient handling of long-range dependencies, enhancing the model’s ability to capture intricate relationships within the data.

Transformers: Building on self-attention, transformers have become the backbone of many state-of-the-art models in NLP and beyond. Because the architecture processes all positions of a sequence in parallel rather than one step at a time, transformers train faster than recurrent models and have often achieved better performance.
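Scaled dot-product self-attention, the form used in the original transformer, can be sketched for a single head in plain Python. Each token's query is compared with every token's key, the scores are scaled by `sqrt(d_k)` and normalized with a softmax, and the resulting weights mix the value vectors. The projection matrices here are hypothetical identity matrices; in a transformer they are learned, and several heads run side by side.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V.
    X holds one row per token; each output row is a weighted mix of all value rows."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    outputs, attention = [], []
    for q in Q:  # each token's row is independent of the others
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # how strongly this token attends to every token
        attention.append(weights)
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return outputs, attention

# Hypothetical 3-token sequence with 2-dimensional embeddings and identity projections
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
outputs, attention = self_attention(X, eye, eye, eye)
for row in attention:
    print([round(w, 3) for w in row])
```

Because each token's row is computed independently, all rows can be evaluated in parallel, and every token can attend directly to any other token regardless of how far apart they are in the sequence.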

12. Meta-Learning

Learning to Learn: Meta-learning focuses on designing models that can learn new tasks quickly with minimal data, often by leveraging prior knowledge from similar tasks.

Model-Agnostic Meta-Learning (MAML): Trains a model’s initial parameters so that it can adapt to new tasks with just a few gradient updates.
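The two-level structure of MAML can be sketched on a toy task family: 1D regressions `y = a * x` whose slope `a` differs from task to task. The inner loop takes one gradient step on a task's support set; the outer loop updates the shared initialization using the loss on a query set after that adaptation. Because this one-parameter model has a quadratic loss, the second-derivative term that MAML differentiates through is a constant that can be written by hand; in a neural network it would come from automatic differentiation (or be dropped, as in first-order MAML). The task family, function names, and hyperparameters are hypothetical.

```python
import random

def loss_grad(w, points):
    """Mean squared error of the model y_hat = w * x and its derivative w.r.t. w."""
    n = len(points)
    loss = sum((w * x - y) ** 2 for x, y in points) / n
    grad = sum(2.0 * (w * x - y) * x for x, y in points) / n
    return loss, grad

def second_derivative(points):
    """For this linear model the loss is quadratic in w, so d2L/dw2 = 2 * mean(x^2)."""
    return sum(2.0 * x * x for x, _ in points) / len(points)

def sample_task(rng):
    """Hypothetical task family: y = a * x with a task-specific slope a."""
    a = rng.uniform(1.0, 3.0)
    support = [(x, a * x) for x in (rng.uniform(-1, 1) for _ in range(5))]
    query = [(x, a * x) for x in (rng.uniform(-1, 1) for _ in range(5))]
    return support, query

def maml_train(steps=2000, inner_lr=0.1, meta_lr=0.01, seed=0):
    rng = random.Random(seed)
    w = 0.0  # the meta-learned initialization
    for _ in range(steps):
        support, query = sample_task(rng)
        _, g_support = loss_grad(w, support)
        w_adapted = w - inner_lr * g_support  # inner loop: one gradient step on the task
        _, g_query = loss_grad(w_adapted, query)
        # chain rule through the inner step: d(w_adapted)/dw = 1 - inner_lr * L''(w)
        meta_grad = g_query * (1.0 - inner_lr * second_derivative(support))
        w -= meta_lr * meta_grad  # outer loop: update the shared initialization
    return w

w_init = maml_train()
print(round(w_init, 2))
```

The learned initialization should settle near 2, the average slope across tasks, a starting point from which a single gradient step moves toward any particular task's slope.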

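13. Graph Neural Networks (GNNs)

Message Passing: GNNs operate on graph-structured data, such as social networks or molecules. Each node updates its representation by aggregating information from its neighbors, so that after several layers it reflects the structure of its wider neighborhood.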
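To make the idea of passing information between connected nodes concrete, here is a minimal sketch of one message-passing step, the building block of many GNN layers. It is deliberately simplified: a real GNN layer would apply learned weight matrices and a nonlinearity, whereas here each node just mixes its own features with the mean of its neighbors' features using two hypothetical fixed weights. The graph and node features are made up.

```python
def message_passing_step(features, adjacency, self_weight=0.5, neighbor_weight=0.5):
    """One simplified GNN step: each node combines its own features with the
    mean of its neighbors' features (mean aggregation)."""
    new_features = {}
    for node, feats in features.items():
        neighbors = adjacency[node]
        if neighbors:
            mean_nb = [sum(features[n][i] for n in neighbors) / len(neighbors)
                       for i in range(len(feats))]
        else:
            mean_nb = [0.0] * len(feats)
        new_features[node] = [self_weight * f + neighbor_weight * m
                              for f, m in zip(feats, mean_nb)]
    return new_features

# Hypothetical path graph A - B - C with 1-dimensional node features
adjacency = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
features = {"A": [1.0], "B": [0.0], "C": [0.0]}
h1 = message_passing_step(features, adjacency)
h2 = message_passing_step(h1, adjacency)
print(h1)
print(h2)
```

After one step only B has picked up A's signal; after two steps it reaches C, which shows how stacking layers widens the neighborhood each node can "see".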

14. Reinforcement Learning (RL)

Agent-Environment Interaction: In RL, an agent learns to make decisions by interacting with an environment to maximize cumulative reward.

Deep Reinforcement Learning: Combining deep learning with RL means using neural networks to approximate value functions or policies. Notable examples include AlphaGo and OpenAI’s Dota 2 bot, OpenAI Five. This integration lets RL algorithms tackle complex tasks by leveraging the representational power of deep learning models.
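The agent-environment loop can be illustrated with tabular Q-learning, a classic RL algorithm that is not itself "deep": it stores one estimated value per state-action pair. Deep RL methods such as DQN replace this table with a neural network that approximates the same values. The corridor environment, reward, and hyperparameters below are hypothetical.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = [-1, +1]  # move left or move right

def env_step(state, action):
    """Toy environment: reward 1.0 for reaching the goal, 0.0 otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # epsilon-greedy: explore occasionally (or on ties), otherwise act greedily
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env_step(state, ACTIONS[a])
            # TD target: immediate reward plus discounted value of the best next action
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q = q_learning()
for s, (left, right) in enumerate(q):
    print(s, round(left, 3), round(right, 3))
```

After training, moving right should have the higher estimated value in every non-goal state, because that is the shortest path to the reward and the discount factor `gamma` favors reaching it sooner.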

15. Few-Shot and Zero-Shot Learning

Few-Shot Learning: Trains models to recognize new classes from only a few labeled examples.

Zero-Shot Learning: Models are capable of recognizing new classes without having seen any examples during training, often using semantic information like word vectors.
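Both ideas can be sketched as nearest-neighbor classification in an embedding space, in the spirit of prototype-based few-shot methods. The sketch assumes inputs have already been mapped to vectors by a trained network; the 3-dimensional embeddings below are hypothetical stand-ins. For few-shot learning, each class vector is the average of a handful of labeled examples; for zero-shot learning, a class with no examples is represented by semantic side information such as a word vector, assumed here to share the same space as the input embeddings.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def mean_vector(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def classify(query, class_vectors):
    """Return the class whose reference vector is most similar to the query embedding."""
    return max(class_vectors, key=lambda c: cosine(query, class_vectors[c]))

# Few-shot: each class vector is the mean (prototype) of two labeled example embeddings.
support = {
    "cat": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "dog": [[0.1, 0.9, 0.1], [0.2, 0.8, 0.0]],
}
prototypes = {label: mean_vector(examples) for label, examples in support.items()}
few_shot_label = classify([0.85, 0.15, 0.05], prototypes)

# Zero-shot: no training examples of "zebra"; its vector comes from side information.
semantic = {"horse": [0.1, 0.1, 0.9], "zebra": [0.5, 0.0, 0.8]}
zero_shot_label = classify([0.6, 0.05, 0.7], semantic)
print(few_shot_label, zero_shot_label)
```

The classification rule is identical in both cases; what changes is where the class vectors come from, a few examples in one case and semantic information in the other.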

Conclusion

The idea behind deep learning algorithms is to build and train neural networks with multiple layers that can automatically learn complex patterns and representations from large datasets. Furthermore, innovations like attention mechanisms, meta-learning, graph neural networks, and generative models have significantly expanded the capabilities and applications of deep learning. Consequently, these advancements have enabled more sophisticated and versatile AI systems across various domains.